The global AI in fashion market was valued at $270 million and is projected to grow at a compound annual growth rate (CAGR) of 36.9%, reaching $4.4 billion by 2027. A significant contributor to this growth is the emergence of AI-powered clothes changers. These tools enable virtual try-ons that let consumers see how clothes would look on them without a physical fitting.

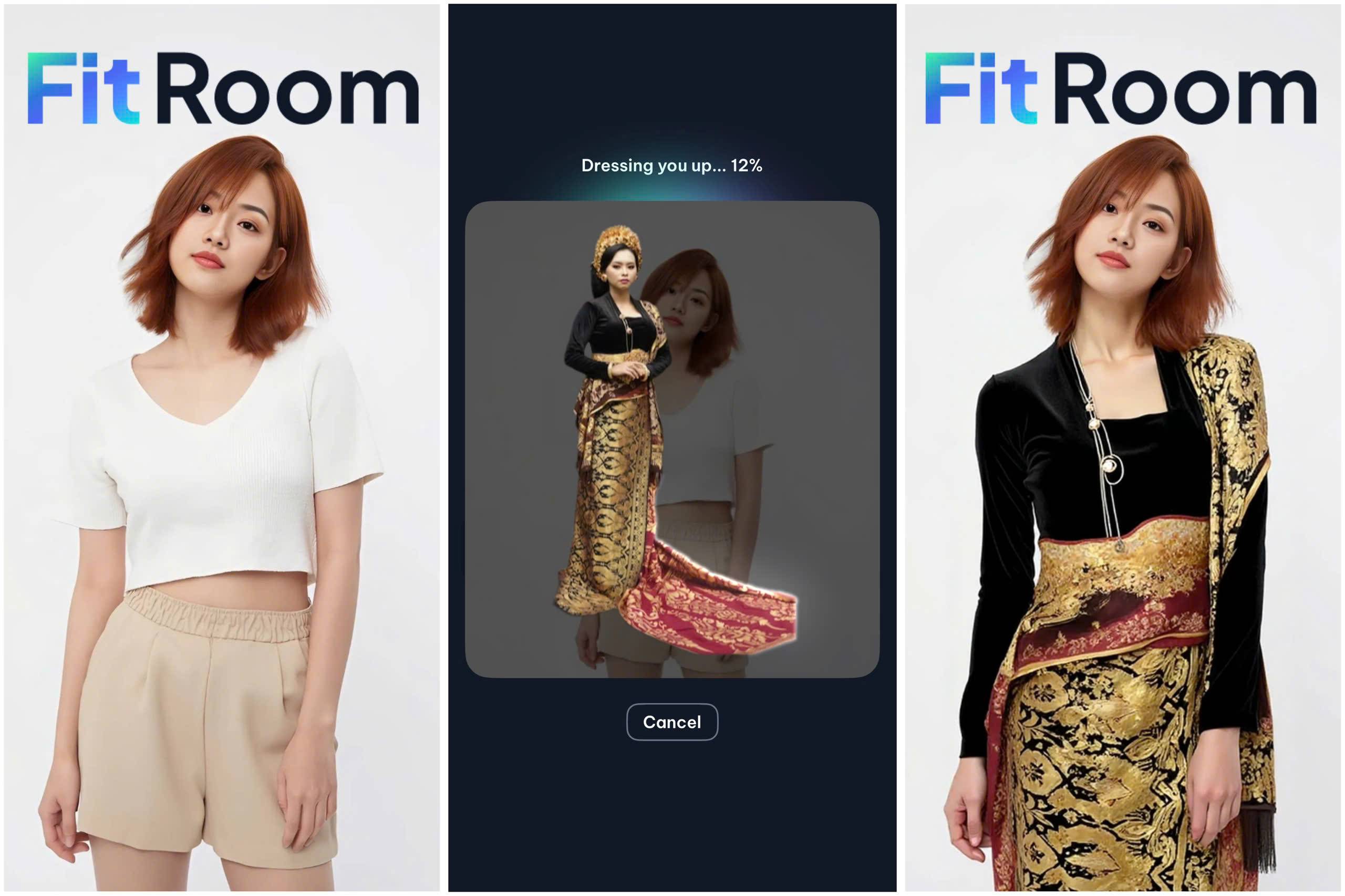

Among the leading AI clothes changers, FitRoom stands out as a cutting-edge solution that leverages advanced deep learning and computer vision models to create highly realistic outfit swaps. But how does this technology work? In this article, we’ll explore the AI models, deep learning techniques, and virtual try-on networks behind these innovations, along with how FitRoom compares to other AI clothes changers.

What technology does an AI clothes changer use?

AI clothes changers rely on a combination of deep learning, computer vision, and image processing techniques to generate realistic outfit swaps. These systems analyze clothing textures, fit, and alignment so that garments appear natural on the user's body. Below are the key technologies that AI-driven virtual try-ons typically rely on.

AI models

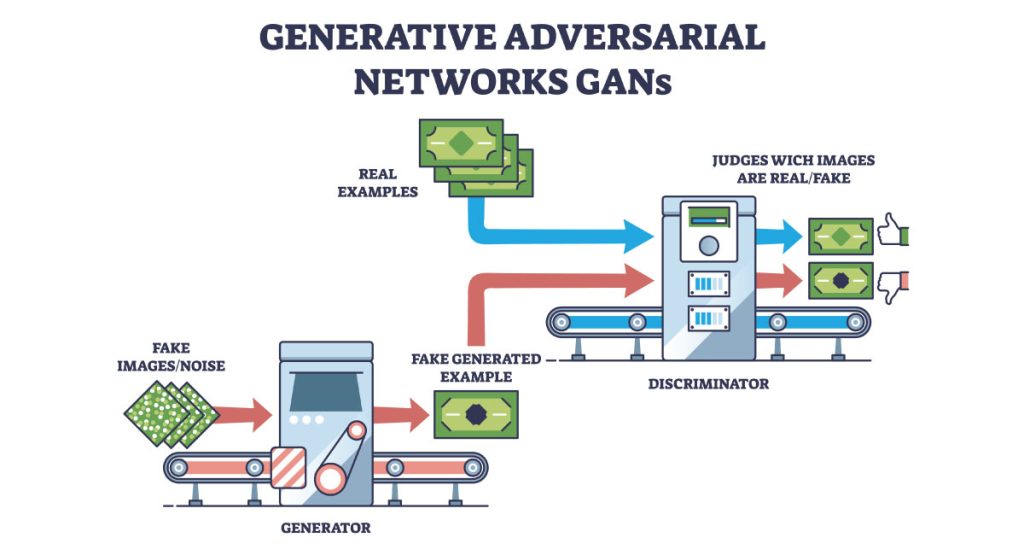

GANs help create realistic outfit swaps

Generative Adversarial Networks (GANs) are a core component of many AI clothes changers, enabling realistic and seamless outfit transformations. A GAN consists of two neural networks, a generator and a discriminator, that are trained against each other. The generator creates new clothing images, and the discriminator tries to tell them apart from real photographs. This competition pushes the generator to improve until its output is hard to distinguish from real images.

This adversarial training helps AI-generated clothing reproduce real-world fabric textures, shadows, and folds, allowing for natural-looking virtual try-ons.
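The sketch below makes the generator/discriminator game concrete. It shows the standard GAN losses in their binary cross-entropy form, computed from the discriminator's output probabilities. The function names and sample probabilities are illustrative assumptions. In a real try-on system these scores come from deep networks judging garment images, and the losses drive gradient updates to both networks.

```python
import math

# Minimal sketch of the standard GAN losses (binary cross-entropy form).
# d_real: discriminator scores D(x) on real photos, as probabilities in (0, 1).
# d_fake: discriminator scores D(G(z)) on generated try-on images.
EPS = 1e-12  # keeps log() away from zero


def discriminator_loss(d_real, d_fake):
    """D is rewarded for scoring real images near 1 and generated ones near 0."""
    real_term = sum(-math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating form: G is rewarded when D scores its images near 1."""
    return sum(-math.log(p + EPS) for p in d_fake) / len(d_fake)


# Early in training the discriminator easily spots fakes, so G's loss is high...
early = generator_loss([0.1, 0.2])
# ...and it falls as generated garments start to fool the discriminator.
later = generator_loss([0.5, 0.4])
print(round(early, 3), round(later, 3))  # 1.956 0.805
```

The generator uses the common "non-saturating" objective, -log D(G(z)). Early in training it gives stronger gradients than directly minimizing log(1 - D(G(z))).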

Innovative AI models like StyleGAN, Pix2Pix, and CycleGAN

Several advanced AI models enhance virtual outfit generation by refining clothing realism and adaptability:

- StyleGAN: Developed by NVIDIA, StyleGAN is known for generating highly detailed and customizable images. It helps create high-resolution outfit swaps while maintaining a user’s natural appearance.

- Pix2Pix: This model is particularly effective in image-to-image translation, making it ideal for transforming one clothing style into another while preserving body details.

- CycleGAN: Unlike Pix2Pix, which requires paired training data, CycleGAN can learn to swap outfits using unpaired datasets, making it useful for diverse fashion applications.

These models are not a single pipeline. Virtual try-on systems typically borrow and combine ideas from them to produce smooth, high-quality transformations that make virtual clothes look as realistic as possible.
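The practical difference between Pix2Pix and CycleGAN comes down to one loss term.

- Pix2Pix compares its output with a paired ground-truth image.
- CycleGAN only requires that translating an image to the other domain and back reproduces the input.

The sketch below shows both terms. It uses toy one-dimensional "images", and the functions `g`, `f_exact`, and `f_rough` are hypothetical hand-written stand-ins for trained generators. In the full models these terms are added to the adversarial losses with a weighting factor.

```python
# Toy "images" are flat lists of pixel values. g maps domain A -> B; f_* map
# B -> A. All three are hypothetical stand-ins for trained generators.


def l1(a, b):
    """Mean absolute difference between two equally sized images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def pix2pix_l1_term(gen, x_a, y_b_paired):
    """Pix2Pix-style reconstruction term: needs the paired ground truth for x_a."""
    return l1(gen(x_a), y_b_paired)


def cycle_consistency(gen_ab, gen_ba, x_a, y_b):
    """CycleGAN-style term: A->B->A and B->A->B should both return the input."""
    return l1(gen_ba(gen_ab(x_a)), x_a) + l1(gen_ab(gen_ba(y_b)), y_b)


def g(img):
    return [2 * p + 1 for p in img]


def f_exact(img):  # exact inverse of g
    return [(p - 1) / 2 for p in img]


def f_rough(img):  # imperfect inverse of g
    return [p / 2 for p in img]


x_a = [0.0, 0.5, 1.0]  # unpaired sample from domain A
y_b = [1.0, 3.0, 2.0]  # unpaired sample from domain B (not g(x_a))

print(cycle_consistency(g, f_exact, x_a, y_b))  # 0.0
print(cycle_consistency(g, f_rough, x_a, y_b))  # 1.5
print(pix2pix_l1_term(g, x_a, [1.0, 2.0, 3.0]))  # 0.0 (paired target matches)
```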

Deep learning

Deep learning plays a crucial role in AI clothes changers by enabling the system to analyze, understand, and manipulate clothing details in images. Multi-layer neural networks help AI models recognize textures, align garments correctly, and ensure realistic rendering.

Deep learning helps in analyzing clothing textures, fit, and alignment

AI virtual try-ons rely on deep learning to detect intricate fabric details such as folds, shadows, and material properties. By analyzing these features, the AI ensures that the new outfit looks natural and maintains realistic lighting and texture consistency.

For example, deep learning models can distinguish between a rigid denim jacket and a flowing silk dress, applying different transformations based on their physical properties. This helps avoid unrealistic distortions when swapping outfits.

Convolutional Neural Networks (CNNs) for image recognition & segmentation

Convolutional Neural Networks (CNNs) are widely used for image recognition and segmentation, two essential processes in AI clothes changers:

- Image recognition: CNNs identify key elements in an image, such as body parts and clothing items, to determine where changes should be applied.

- Segmentation: These models separate the clothing from the person and background, ensuring that only the outfit is altered while the user’s body remains unchanged.

Popular CNN-based architectures, such as U-Net and Mask R-CNN, help AI clothes changers trace clothing contours. Transformations can then be confined to clothing regions without affecting non-clothing areas.
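A CNN layer is built from one repeated operation: slide a small kernel over the image and take a weighted sum at each position. The pure-Python sketch below applies a single hand-picked kernel, followed by a ReLU, to a toy patch. The kernel is a Sobel horizontal-gradient filter, chosen here as an illustrative assumption. It responds along the boundary between background and garment, the kind of contour cue segmentation networks build on. Real CNNs learn many kernels per layer and stack many layers.

```python
# One convolution + ReLU, the basic operation a CNN layer repeats many times.
# The kernel is hand-picked (Sobel, horizontal gradient); a CNN learns its own.


def conv2d(image, kernel):
    """'Valid' 2D cross-correlation (what deep-learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Zero out negative responses, as a CNN activation layer would."""
    return [[max(0.0, v) for v in row] for row in feature_map]


SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy 4x5 grayscale patch: background (0) on the left, garment (1) on the right.
patch = [[0, 0, 0, 1, 1] for _ in range(4)]

edges = relu(conv2d(patch, SOBEL_X))
print(edges)  # [[0.0, 4.0, 4.0], [0.0, 4.0, 4.0]]
```

The response is zero where the window sees only background and positive wherever the window straddles the garment boundary.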

Multi-layer neural networks help in rendering fabrics realistically

To make virtual try-ons look lifelike, AI uses deep multi-layer neural networks that model the complex behavior of fabrics. These networks account for:

- Fabric draping and stretchability: AI predicts how a piece of clothing would conform to different body types and poses.

- Light reflection and shading: Neural networks adjust clothing brightness and shadows to match the surrounding environment.

- Wrinkle and texture retention: AI ensures that fabric textures remain natural, whether the clothing is loose or tightly fitted.
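Production systems learn fabric shading with neural networks. A classical heuristic still shows the idea behind wrinkle and shading retention: treat the original garment's brightness, relative to its average, as a shading map, and multiply the new garment's color by it. This is a swapped-in illustration, not a neural network and not any specific product's method. The inputs are assumed to be a luminance image and a binary garment mask.

```python
# Classical heuristic (not a neural network): keep the folds and shadows of the
# original garment by reusing its relative brightness as a shading map, then
# apply that shading to the new garment's flat color.


def transfer_shading(orig_luma, mask, new_rgb):
    """
    orig_luma: 2D list of luminance values (0..1) from the photo.
    mask:      2D list of 0/1 flags marking garment pixels.
    new_rgb:   flat (r, g, b) color of the replacement garment, each 0..1.
    Returns a 2D list of RGB tuples for garment pixels (None elsewhere).
    """
    garment = [lum for lr, mr in zip(orig_luma, mask)
               for lum, m in zip(lr, mr) if m]
    mean_luma = sum(garment) / len(garment)
    out = []
    for lr, mr in zip(orig_luma, mask):
        row = []
        for lum, m in zip(lr, mr):
            if not m:
                row.append(None)  # non-garment pixel: left to the original photo
                continue
            shade = lum / mean_luma  # >1 on highlights, <1 in folds and shadows
            row.append(tuple(min(1.0, c * shade) for c in new_rgb))
        out.append(row)
    return out


luma = [[0.75, 0.25], [0.5, 0.9]]
mask = [[1, 1], [1, 0]]  # bottom-right pixel is background
result = transfer_shading(luma, mask, (0.5, 0.25, 0.0))
print(result)  # [[(0.75, 0.375, 0.0), (0.25, 0.125, 0.0)], [(0.5, 0.25, 0.0), None]]
```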

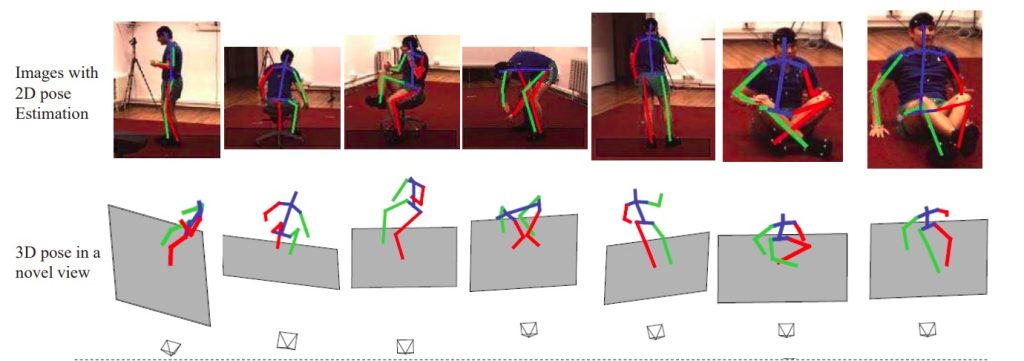

Pose estimation & body mapping

For AI clothes changers to create realistic outfit swaps, they must accurately understand the user’s body shape, posture, and movement. Pose estimation and body mapping technologies help AI track body alignment, ensuring that virtual garments fit naturally.

Pose estimation algorithms track body movements

Pose estimation is the process of detecting key body points, such as joints and limbs, to understand how a person is positioned in an image. Two popular pose estimation algorithms used in AI clothes changers are:

- OpenPose: A real-time multi-person system that detects the human body, hand, face, and foot key points. OpenPose helps AI identify a user’s posture and adjust clothing accordingly.

- DensePose: Developed by Facebook AI Research, DensePose maps each pixel belonging to a person in an image to a point on the surface of a 3D body model, allowing for more precise garment alignment.

These algorithms help clothing follow the user's pose, preventing unrealistic distortions when changing outfits.
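Pose estimators output 2D keypoints, and try-on systems derive quantities such as joint angles from them. The sketch below assumes keypoints arrive as (x, y, confidence) triples in the style of OpenPose's output, where undetected points typically come back with zero confidence. The joint names and the 0.3 threshold are hypothetical choices for illustration. Low-confidence points are rejected, which is one simple way to avoid trusting occluded joints.

```python
import math

# Assumption: keypoints are (x, y, confidence) triples in image pixels, similar
# to what OpenPose reports; undetected points come back with confidence 0.
MIN_CONFIDENCE = 0.3  # hypothetical threshold, not an OpenPose default


def joint_angle(a, b, c, min_conf=MIN_CONFIDENCE):
    """Angle in degrees at joint b between segments b->a and b->c.

    Returns None when any keypoint is missing or unreliable (e.g. occluded),
    so downstream fitting can fall back instead of trusting a bad estimate.
    """
    if min(a[2], b[2], c[2]) < min_conf:
        return None
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return math.degrees(abs(math.atan2(cross, dot)))


pose = {
    "right_shoulder": (100.0, 200.0, 0.95),
    "right_elbow": (100.0, 260.0, 0.90),
    "right_wrist": (160.0, 260.0, 0.85),  # forearm bent at the elbow
    "left_shoulder": (220.0, 200.0, 0.93),
    "left_elbow": (220.0, 260.0, 0.88),
    "left_wrist": (0.0, 0.0, 0.0),        # not detected (hidden behind the body)
}

right = joint_angle(pose["right_shoulder"], pose["right_elbow"], pose["right_wrist"])
left = joint_angle(pose["left_shoulder"], pose["left_elbow"], pose["left_wrist"])
print(right, left)  # roughly 90 degrees for the right elbow; None for the left
```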

AI detects body shape, pose, and positioning to fit clothes naturally

AI clothes changers analyze a user’s body shape, proportions, and pose before applying outfit swaps. This involves:

- Identifying key points (shoulders, waist, hips, arms, legs, etc.) to understand the body’s structure.

- Adjusting clothing fit based on detected posture and angles (e.g., a jacket appearing different when arms are raised versus resting).

- Handling occlusions (hidden body parts) to maintain realistic clothing alignment, even when parts of the body are obscured.

By leveraging body shape detection, AI ensures that virtual clothing fits properly instead of looking flat or unnaturally stretched.
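The simplest way to place a flat garment template on a detected body is to match two reference points, such as the shoulders, with a similarity transform (uniform scale, rotation, and translation). The helper below is hypothetical, and the coordinates are made up. It uses Python complex numbers so that scale and rotation collapse into a single multiplication. A real system would go much further, since this fit ignores body shape below the shoulders.

```python
# Hypothetical helper: align a flat garment template to a detected body by
# matching the template's two shoulder points to the person's shoulder keypoints.


def similarity_from_two_points(src_a, src_b, dst_a, dst_b):
    """Return a function mapping (x, y) so that src_a->dst_a and src_b->dst_b."""
    p1, p2 = complex(*src_a), complex(*src_b)
    q1, q2 = complex(*dst_a), complex(*dst_b)
    s = (q2 - q1) / (p2 - p1)  # complex factor = scale and rotation together
    t = q1 - s * p1            # translation

    def transform(pt):
        z = s * complex(*pt) + t
        return (z.real, z.imag)

    return transform


garment_shoulders = ((40.0, 10.0), (160.0, 10.0))  # template pixel coordinates
body_shoulders = ((210.0, 305.0), (330.0, 335.0))  # detected keypoints (tilted)

fit = similarity_from_two_points(*garment_shoulders, *body_shoulders)
hem_center = fit((100.0, 250.0))  # where the template's hem center lands
print(hem_center)  # (210.0, 560.0)
```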

The role of 3D body modeling in improving accuracy

3D body modeling takes pose estimation a step further by reconstructing the user’s body in a virtual environment. This allows AI to:

- Create a personalized digital mannequin that closely matches the user’s real-world shape.

- Simulate fabric draping and movement for a more realistic clothing fit.

- Improve clothing physics by considering how different materials interact with body curves and motion.

Some AI clothes changers integrate 3D body scanning to enhance accuracy, providing users with an experience closer to in-store try-ons. By combining pose estimation, body mapping, and 3D modeling, AI clothes changers ensure that virtual outfits adapt naturally to different body types and movements.
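Fabric draping is usually handled by a physics simulation. The sketch below shows what one simulation step involves, as an illustrative assumption about technique rather than a description of any particular product:

- A single strip of fabric is modeled as a chain of particles pinned at one end, like a sleeve hanging from a shoulder seam.
- The strip is advanced with Verlet integration.
- Fixed spacing between particles is enforced with iterative distance constraints, in the style of position-based dynamics.

Real garment simulators work on 2D meshes with bending, shear, and collision terms against the body model. The constants here are arbitrary.

```python
# Minimal draping sketch: a 1D strip of cloth as a chain of particles,
# advanced with Verlet integration and held at fixed spacing by iterative
# distance constraints (position-based dynamics). Constants are arbitrary.

GRAVITY = -9.81  # m/s^2, along y
DT = 0.01        # time step in seconds
DAMPING = 0.98   # fraction of velocity kept each step (crude air resistance)
ITERATIONS = 20  # constraint-solver passes per step


def simulate_strip(n=10, spacing=0.1, steps=800):
    # Start horizontal; particle 0 is pinned (e.g. sewn to a shoulder seam).
    pos = [[i * spacing, 0.0] for i in range(n)]
    prev = [p[:] for p in pos]
    for _ in range(steps):
        # Verlet integration: velocity is implied by (pos - prev).
        for i in range(1, n):
            x, y = pos[i]
            vx = (x - prev[i][0]) * DAMPING
            vy = (y - prev[i][1]) * DAMPING
            prev[i] = [x, y]
            pos[i] = [x + vx, y + vy + GRAVITY * DT * DT]
        # Project distance constraints so neighbours stay `spacing` apart.
        for _ in range(ITERATIONS):
            for i in range(n - 1):
                (x1, y1), (x2, y2) = pos[i], pos[i + 1]
                dx, dy = x2 - x1, y2 - y1
                dist = (dx * dx + dy * dy) ** 0.5
                if dist == 0.0:
                    continue
                diff = (dist - spacing) / dist
                if i == 0:  # pinned particle never moves; fix its neighbour only
                    pos[i + 1] = [x2 - dx * diff, y2 - dy * diff]
                else:       # split the correction between both particles
                    pos[i] = [x1 + 0.5 * dx * diff, y1 + 0.5 * dy * diff]
                    pos[i + 1] = [x2 - 0.5 * dx * diff, y2 - 0.5 * dy * diff]
    return pos


strip = simulate_strip()
print(strip[-1])  # the free end has swung down to hang below the pinned point
```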

Image-to-image translation & virtual try-on networks (VTON)

AI clothes changers use image-to-image translation to modify outfits while keeping the rest of the image intact. This technology ensures that clothing swaps look seamless, preserving the user’s body structure, facial features, and background details.

Image-to-image translation AI changes clothing while keeping body details intact

Image-to-image translation is a deep learning technique that allows AI to transform one visual element into another while maintaining essential image properties. In the case of AI clothes changers, this process:

- Replaces the original outfit with a new one while ensuring it aligns with the user's body.

- Preserves skin tones, hair, and accessories to avoid unnatural blending.

- Adapts lighting and shadows to make the new clothing look natural.

By using generative models and transformation networks, AI can swap clothing in a highly realistic way, making virtual try-ons more immersive and accurate.
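One simple way to keep body details intact is to composite the generated clothing back into the original photo with a soft clothing mask. Only masked pixels take the generator's output; the face, hair, skin, and background are copied from the original. The sketch below assumes the mask comes from a segmentation model and uses values between 0 and 1. Fractional values at garment edges feather the seam.

```python
# Alpha compositing with a soft clothing mask: only masked pixels take the
# generated garment; everything else is copied from the original photo.


def composite(original, generated, mask):
    """Blend per pixel: out = m * generated + (1 - m) * original, with m in [0, 1]."""
    out = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        out.append([
            tuple(m * g + (1.0 - m) * o for o, g in zip(o_px, g_px))
            for o_px, g_px, m in zip(orig_row, gen_row, mask_row)
        ])
    return out


original = [[(0.9, 0.7, 0.6), (0.2, 0.2, 0.8)]]   # a skin pixel, an old-shirt pixel
generated = [[(0.5, 0.5, 0.5), (1.0, 0.0, 0.0)]]  # generator output (new red shirt)
mask = [[0.0, 1.0]]                                # 0 = keep original, 1 = use generated

print(composite(original, generated, mask))  # [[(0.9, 0.7, 0.6), (1.0, 0.0, 0.0)]]
```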

TryOnGAN and CP-VTON (Characteristic-Preserving Virtual Try-On Network)

Several AI models specialize in virtual try-on technology by improving clothing adaptation and realism.

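- TryOnGAN – A pose-conditioned GAN built on StyleGAN2. It generates high-quality try-on images by interpolating, layer by layer, between the person's and the garment's representations in the generator's latent space. The garment takes on the user's body shape and pose.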

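- CP-VTON (Characteristic-Preserving Virtual Try-On Network) – This model works in two stages. First, it warps the target garment to the person's body shape with a learned thin-plate spline (TPS) transformation. It then renders the try-on result and blends in the warped garment with a composition mask, preserving details such as logos and text.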

Both models help AI clothes changers keep garments accurately positioned and reduce distortions when swapping outfits. Because these learned models adapt each garment to the person's pose and body shape, they make AI clothes changers more effective than traditional virtual try-on tools.
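CP-VTON aligns the garment with the body using a thin-plate spline (TPS) warp, which its Geometric Matching Module predicts with a neural network. The sketch below instead fits a TPS directly from a handful of hand-picked control-point pairs. This substitution is an assumption made to keep the example self-contained. The warp passes exactly through every control point and bends smoothly in between, which is what lets a flat product image follow a body's contours.

```python
import math

# Thin-plate spline (TPS) warp fitted from control-point pairs. In CP-VTON a
# network predicts the warp; here the correspondences are simply given.


def _u(r2):
    """TPS radial basis U(r) = r^2 log r^2, written in terms of r2 = r^2; U(0) = 0."""
    return r2 * math.log(r2) if r2 > 0 else 0.0


def _dist2(p, q):
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def _solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_tps(src, dst):
    """Return a function mapping points so that each src[i] lands on dst[i]."""
    n = len(src)
    size = n + 3
    a = [[0.0] * size for _ in range(size)]
    for i, p in enumerate(src):
        for j, q in enumerate(src):
            a[i][j] = _u(_dist2(p, q))                       # radial-basis block
        a[i][n:] = [1.0, p[0], p[1]]                         # affine part
        a[n][i], a[n + 1][i], a[n + 2][i] = 1.0, p[0], p[1]  # side conditions
    cx = _solve(a, [q[0] for q in dst] + [0.0] * 3)
    cy = _solve(a, [q[1] for q in dst] + [0.0] * 3)

    def warp(pt):
        def ev(c):
            v = c[n] + c[n + 1] * pt[0] + c[n + 2] * pt[1]
            return v + sum(c[i] * _u(_dist2(p, pt)) for i, p in enumerate(src))
        return (ev(cx), ev(cy))

    return warp


src = [(0, 0), (1, 0), (0, 1), (1, 1), (0.5, 0.5)]  # garment control points
dst = [(0, 0), (1.1, 0.0), (-0.1, 1.0), (1.0, 1.2), (0.55, 0.6)]  # on the body
warp = fit_tps(src, dst)
print(warp((0.5, 0.5)))   # lands on (0.55, 0.6), up to floating-point error
print(warp((0.25, 0.75)))  # an in-between point, bent smoothly
```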

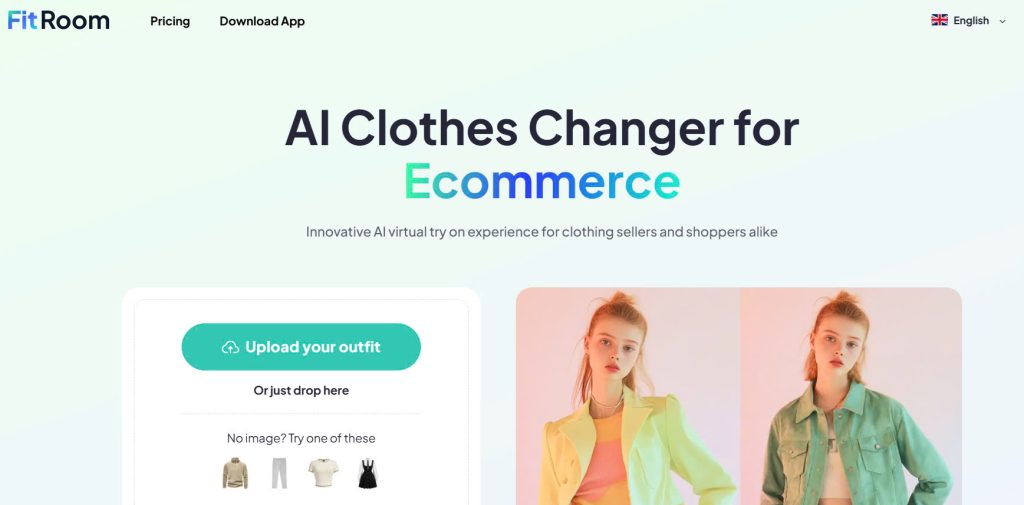

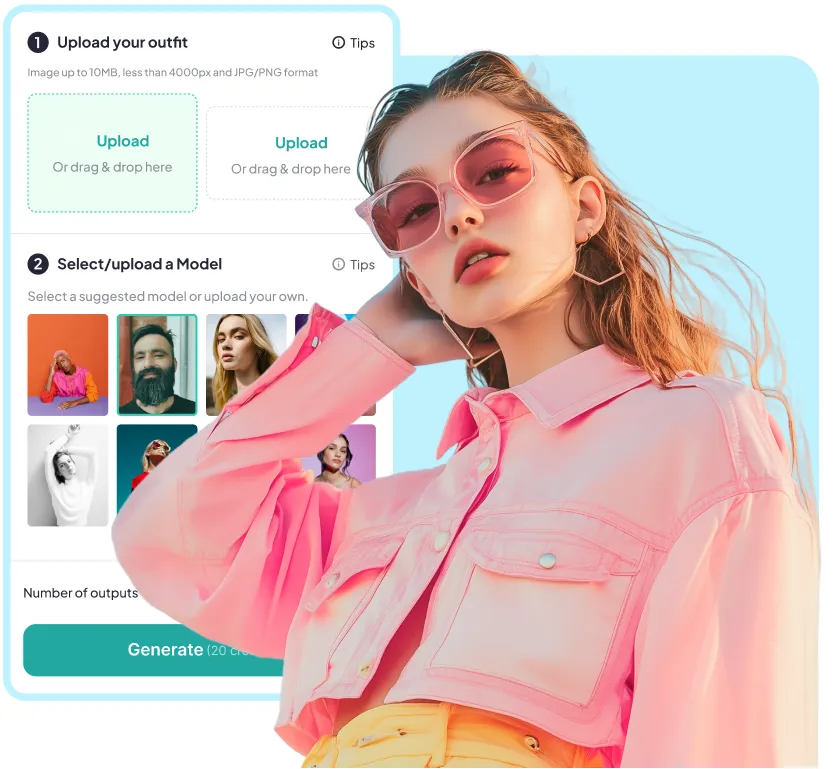

FitRoom – The leading AI clothes changer

FitRoom stands out as one of the most advanced AI-powered clothes changers. It integrates advanced versions of these image-to-image translation models to offer highly realistic virtual outfit try-ons.

FitRoom's AI clothes changer combines deep learning, pose estimation, and generative AI models to create seamless clothing swaps. This approach makes virtual outfits appear more natural, improving the overall user experience compared to basic AI-powered outfit generators.

FitRoom offers a more tailored and immersive virtual try-on experience compared to standard AI outfit generators

FitRoom integrates cutting-edge Generative Adversarial Networks (GANs) to generate lifelike outfit swaps. By training on vast datasets of clothing and human body images, FitRoom’s AI can:

- Seamlessly replace outfits without affecting body proportions or background details.

- Preserve fabric textures, shadows, and lighting for natural-looking clothing swaps.

- Adapt garments to various poses and body types, ensuring a personalized fit.

To enhance realism, FitRoom likely employs pose estimation algorithms (OpenPose, DensePose) and 3D body modeling to track body movements and positioning. These technologies help FitRoom ensure clothes fit naturally regardless of user posture.

Together, these techniques can improve the accuracy of outfit placement, prevent distortions, and enhance realism by accounting for body shape variations and garment draping physics.

FitRoom uses Convolutional Neural Networks (CNNs) and clothing parsing networks to separate garments from the body and background. This process includes accurate clothing segmentation to distinguish between different fashion items, fabric-aware rendering to maintain realistic textures and folds, and dynamic lighting adjustments to ensure color consistency and natural shadows.

Traditional virtual try-on tools may take several seconds to generate an image. FitRoom likely incorporates real-time AI processing instead, which lets users preview different outfits instantly, adjust virtual clothing interactively, and enjoy a smooth, engaging try-on session.

Through a combination of GANs, pose estimation, deep learning, and real-time processing, FitRoom sets itself apart as a leading AI clothes changer in the fashion industry.

Comparison: FitRoom tech vs other AI clothes changers

FitRoom is one of many AI clothes changers in the market, but how does it compare to other virtual try-on solutions? Let’s look at the side-by-side comparison of FitRoom’s technology versus other AI-powered outfit generators.

Key differences between FitRoom and other AI clothes changers

| Feature | FitRoom | Generic AI clothes changer |
| --- | --- | --- |
| AI model used | Advanced GANs (e.g., TryOnGAN, CP-VTON variations) | Basic GANs or traditional deep learning models |
| Pose estimation | Uses OpenPose, DensePose, and 3D body modeling for accurate clothing fit | Some models use OpenPose but may lack advanced body mapping |
| Clothing segmentation | High-precision CNN-based segmentation for texture and fabric accuracy | Standard segmentation with occasional distortions |
| Fabric rendering | Retains realistic textures, wrinkles, and lighting consistency | May struggle with texture details, causing flat or stretched fabric |
| Real-time processing | Faster AI processing, allowing instant outfit previews | Often slower, requiring longer processing time |
| Customization & interactivity | Allows dynamic outfit adjustments and multiple clothing layers | Typically offers basic outfit swaps with limited interactivity |

Why does FitRoom stand out?

FitRoom’s use of advanced GANs and body mapping ensures more natural-looking clothing transformations. Unlike many AI outfit generators that struggle with complex poses, FitRoom integrates pose estimation and 3D modeling to ensure outfits fit naturally.

FitRoom excels at preserving texture details, making the clothing look more realistic compared to competitors. While some AI try-on tools take time to process, FitRoom leverages real-time AI to deliver instant previews.

For users looking for a quick and simple outfit swap, many AI clothes changers may suffice. However, for those who want highly accurate, realistic, and interactive virtual try-ons, FitRoom is among the top choices due to its superior AI technology.

The future of digital fashion: AI clothes changer

AI clothes changers have revolutionized the way people try on outfits virtually, combining deep learning, generative AI, pose estimation, and image-to-image translation to create realistic clothing swaps. By leveraging technologies like GANs, CNNs, OpenPose, and 3D body modeling, these systems ensure that virtual outfits align naturally with body shapes, postures, and movements.

Among the various AI-powered outfit generators, FitRoom stands out for its advanced use of real-time AI processing, high-precision fabric rendering, and dynamic pose adaptation. Compared to other virtual try-on solutions, FitRoom offers a more realistic, seamless, and interactive experience, making it one of the leading technologies in the fashion AI space.

As AI continues to evolve, virtual try-on tools will become even more accurate, immersive, and widely adopted, transforming the way consumers shop for clothing online. Whether for e-commerce, personal styling, or fashion innovation, AI clothes changers like FitRoom are paving the way for the future of digital fashion.

Reference: https://www.statista.com/statistics/1070736/global-artificial-intelligence-fashion-market-size/